Breaking Up with Pipelines: Why CtrlB’s On-Demand Ingestor Changes the Game

Aug 6, 2025

“Dear Pipelines,

It’s not me, it’s you. I can’t handle your rigidity anymore. You cost too much, and honestly, you never change. I’ve found someone new for on-demand ingest. They understand me, they scale with me, and they don’t drain me 24/7.”

Introduction

Extract-Transform-Load (ETL) pipelines have been the workhorse of data engineering for decades. In a typical ETL pipeline, data is extracted from various sources, transformed into a consistent format or schema, and then loaded into a database or index for analysis. The approach is popular because it delivers structured, query-ready data and offers a reliable way to bring different kinds of data together for analysis. Many organizations still rely on legacy ETL pipelines to collect logs and metrics, feeding them into search indexes or data warehouses so that engineers can query recent data quickly. After all, ETL was the standard solution for integrating multiple data sources and preparing data for BI reports or debugging dashboards. It’s familiar and time-tested. But as systems grow and requirements evolve, these traditional pipelines are showing their age.
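To make the three stages concrete, here is a minimal Python sketch of a toy ETL job. It is illustrative only and assumes JSON log lines, a hypothetical fixed `LogRecord` schema, and a plain dictionary standing in for the search index or warehouse; it does not describe how any particular ETL product works.

```python
import json
from dataclasses import dataclass


# Hypothetical fixed schema, decided up front as a typical ETL job would.
@dataclass
class LogRecord:
    timestamp: str
    level: str
    message: str


def extract(raw_lines):
    """Extract: parse each raw JSON line pulled from a source."""
    return [json.loads(line) for line in raw_lines]


def transform(events):
    """Transform: coerce every event into the fixed LogRecord schema."""
    return [LogRecord(e["timestamp"], e["level"].upper(), e["message"]) for e in events]


def load(records, index):
    """Load: append records to an index keyed by level (stand-in for a search index)."""
    for record in records:
        index.setdefault(record.level, []).append(record)
    return index


raw = [
    '{"timestamp": "2025-08-06T10:00:00Z", "level": "info", "message": "started"}',
    '{"timestamp": "2025-08-06T10:00:05Z", "level": "error", "message": "db timeout"}',
]
index = load(transform(extract(raw)), {})
print([r.message for r in index["ERROR"]])  # ['db timeout']
```

Every event pays the full extract, transform, and load cost at write time, whether or not anyone ever queries it.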

The Limitations of Traditional ETL Pipelines

Even though ETL pipelines are everywhere, they have serious drawbacks. Let’s break down the biggest pain points that CTOs, SREs, and DevOps engineers face with always-on pipelines:

- Rigidity and Fragility: Legacy ETL pipelines are brittle. A small change in log format or a new field often breaks them, forcing painful schema updates. If the pipeline isn’t updated in time, valuable data may be dropped without notice. In short, they don’t adapt well to change.

- High Latency: Because ETL jobs often run in batches or on fixed schedules, they introduce delays between data generation and availability. If you archive logs to cold storage, querying them can be slow and cumbersome, often involving hours-long batch jobs. In practice, this means slower root cause analysis and delayed insights when you need answers now, not tomorrow.

- Maintenance and Operational Overhead: ETL pipelines need constant upkeep. You have to maintain servers, scripts, databases, and clusters that run 24/7, burning resources even when no one is querying. Teams end up babysitting pipelines, fixing failures, and scaling infrastructure instead of building a product. As data grows, so does the complexity, often requiring a dedicated team just to keep it alive.

- Cost Inefficiency: Always-on pipelines are costly. You pay upfront to ingest, transform, and index everything, even if most logs are never touched. To save money, teams often keep only a few days of data ‘hot’ and push the rest to S3. But cold logs then become hard to reach, needing extra jobs to query. So you either pay huge bills for full retention, or save money and sacrifice quick access to history.

- Siloed and Inflexible Data: Traditional pipelines weren’t built for modern observability. Logs, metrics, and traces end up siloed, making correlation hard. Once data is forced into a schema, it’s stuck; dropping fields or re-parsing means rewriting pipelines and reprocessing data. This rigidity makes free exploration difficult, while today’s teams need to ask ad-hoc questions and get answers without pre-planning every detail.
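The rigidity problem is easy to reproduce. The sketch below assumes a hypothetical pipeline whose transform step keeps only a fixed set of fields (`SCHEMA`). When a service adds a field, that field vanishes; when a service renames one, the stored value silently becomes `None`. No error is raised in either case.

```python
import json

# Hypothetical fixed schema: the only fields this toy pipeline keeps.
SCHEMA = ("timestamp", "level", "message")


def transform_fixed(line):
    """Schema-on-write: project each event onto SCHEMA, discarding everything else."""
    event = json.loads(line)
    return {field: event.get(field) for field in SCHEMA}


# A service starts emitting "request_id" after the schema was fixed.
added = '{"timestamp": "2025-08-06T10:01:00Z", "level": "error", "message": "payment failed", "request_id": "r-42"}'
# Another service renames "message" to "msg".
renamed = '{"timestamp": "2025-08-06T10:02:00Z", "level": "warn", "msg": "disk almost full"}'

stored_added = transform_fixed(added)
stored_renamed = transform_fixed(renamed)
print("request_id" in stored_added)  # False: the new field was dropped
print(stored_renamed["message"])     # None: the renamed field was lost
```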

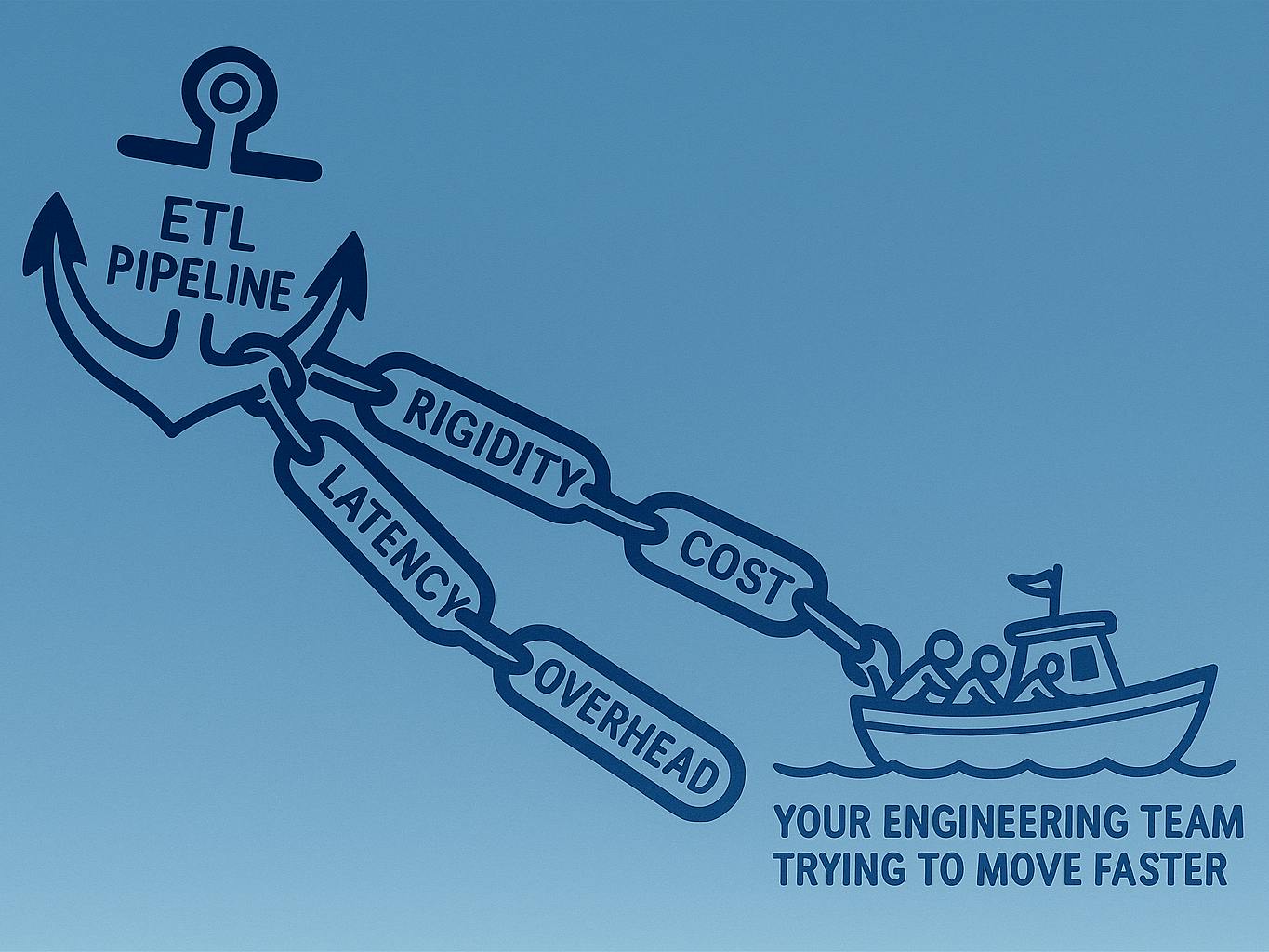

In summary, legacy ETL pipelines can feel like an anchor slowing you down: rigid, slow to update, complex to manage, and costly to scale. They served us well in the past, but today’s cloud-native, real-time world is exposing their cracks.

Meet CtrlB’s On-Demand Ingestor

Imagine if you could get rid of all that ETL baggage: no more constantly running pipelines, no more rigid upfront schemas, no more paying for infrastructure that sits idle. This is exactly the idea behind CtrlB’s on-demand ingestor. It flips the traditional model on its head: instead of collecting and indexing data before you ask a question, it processes data only when you ask one. Here’s how it works and why it’s different:

- Storage in Cheap Object Stores: With CtrlB, you send all your logs and telemetry data straight to a durable, low-cost store (Amazon S3). Your raw data stays in object storage indefinitely if you want. This means you’re not paying for lots of SSD storage or running big databases just to keep data “hot.” All your logs (even from a year ago) can sit quietly in S3 until needed.

- Compute-On-Demand: When you want to search or analyze your data, CtrlB’s ingestor spins up only when you run a query. In other words, no always-on processing runs in the background, burning money. The moment you hit “search”, CtrlB dynamically allocates compute power to read the relevant data from storage, parse it, and execute your query. Once you get your answer, that compute can spin back down. You’re not paying for idle servers or constantly running ETL jobs, which is what makes the on-demand model more efficient.

- No Rigid Schemas Required: Because CtrlB defers the data processing until query time, you don’t have to predefine rigid schemas or parsing rules upfront. Logs are stored as-is. This dynamic approach means you can extract whichever fields you need on the fly. If your log format changes or a new field appears, nothing breaks; you just adjust your query to pull out the new information. There are no more “oops, we dropped that field in the pipeline” surprises. CtrlB’s schema-less ingestion ensures full-fidelity data is always available to explore.

- No More Indexing Pipeline Lag: CtrlB queries data directly from object storage, on demand. You can search yesterday’s, last week’s, or last year’s logs instantly without rehydrating archives. CtrlB was built to query logs in S3 directly, without reingestion or complex reprocessing. The result is a more fluid experience: you ask and you receive, without worrying where the data lives or how old it is.

- Integrated Context and Correlation: Because the ingestor is part of a broader observability platform, CtrlB doesn’t just fetch raw log lines in isolation. It can also correlate logs with trace spans or service metadata. When you run a query, the system can pull in related traces or service context, giving you a rich, contextual answer (for example, tying an error log to the specific microservice and request that produced it). In a legacy setup, you might have to query logs in one system and traces in another, and then mentally stitch them together. With CtrlB there’s no more bouncing between tools or manually aligning timestamps: the context comes included, automatically.
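Schema-on-read and correlation can be sketched in a few lines of Python. This is not CtrlB’s API or implementation; it is a toy model that assumes raw JSON log lines stored as-is, trace spans as plain dictionaries, and a shared `trace_id` field as the join key. Fields are extracted only at query time, so a field that appears only in newer logs (`region` here) is queryable without any pipeline change.

```python
import json


def query_logs(raw_lines, where, fields):
    """Schema-on-read: parse raw lines only at query time and pull out
    whichever fields the query asks for; missing fields come back as None."""
    results = []
    for line in raw_lines:
        event = json.loads(line)
        if where(event):
            results.append({f: event.get(f) for f in fields})
    return results


def attach_spans(rows, spans):
    """Correlate each log row with the trace spans that share its trace_id."""
    by_trace = {}
    for span in spans:
        by_trace.setdefault(span["trace_id"], []).append(span)
    for row in rows:
        row["spans"] = by_trace.get(row.get("trace_id"), [])
    return rows


raw_lines = [  # stored as-is; the second line carries a field the first lacks
    '{"level": "info", "msg": "checkout ok", "trace_id": "t1"}',
    '{"level": "error", "msg": "card declined", "trace_id": "t2", "region": "eu-west-1"}',
]
spans = [
    {"trace_id": "t2", "service": "payments", "operation": "POST /charge"},
    {"trace_id": "t2", "service": "gateway", "operation": "POST /checkout"},
]

errors = query_logs(raw_lines, lambda e: e.get("level") == "error", ["msg", "region", "trace_id"])
for row in attach_spans(errors, spans):
    print(row["msg"], row["region"], [s["service"] for s in row["spans"]])
# card declined eu-west-1 ['payments', 'gateway']
```

Lines that lack a requested field return `None` for it rather than breaking the query, which is the practical difference from the fixed-schema transform of a traditional pipeline.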

In short, CtrlB’s on-demand ingestor is like having an ETL pipeline that runs only when you need it, and only for what you need. You avoid the always-on waste and delay of legacy pipelines. By keeping data in a cheap data lake and activating compute only for queries, you get the best of both worlds: you retain everything but pay only when you actually use it. The architecture is cloud-native: it decouples storage from compute and aligns costs with usage. This is a fundamentally more elastic and scalable way to handle observability data.
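The storage/compute split can be modeled the same way. In this hypothetical sketch, a dictionary stands in for an S3 bucket, and objects follow an assumed date-partitioned key layout (`logs/YYYY-MM-DD/part-N.jsonl`). All parsing and filtering happens inside `run_query`, and only objects whose keys fall in the requested date range are read. A real system would list and fetch objects through the object store’s API instead.

```python
import json
from datetime import date

# Stand-in for an object-store bucket: key -> raw newline-delimited JSON.
# The date-partitioned key layout is a hypothetical convention for this sketch.
BUCKET = {
    "logs/2025-08-04/part-0.jsonl": '{"level": "info", "msg": "deploy v1"}\n',
    "logs/2025-08-05/part-0.jsonl": '{"level": "error", "msg": "oom killed"}\n',
    "logs/2025-08-06/part-0.jsonl": (
        '{"level": "error", "msg": "db timeout"}\n'
        '{"level": "info", "msg": "recovered"}\n'
    ),
}


def keys_in_range(bucket, start, end):
    """Prune by key so only objects for the requested days are read."""
    selected = []
    for key in sorted(bucket):
        day = date.fromisoformat(key.split("/")[1])
        if start <= day <= end:
            selected.append(key)
    return selected


def run_query(bucket, start, end, level):
    """All compute happens inside this call: nothing is parsed or indexed
    ahead of time, and nothing keeps running after it returns."""
    matches = []
    for key in keys_in_range(bucket, start, end):
        for line in bucket[key].splitlines():
            event = json.loads(line)
            if event.get("level") == level:
                matches.append(event["msg"])
    return matches


print(run_query(BUCKET, date(2025, 8, 5), date(2025, 8, 6), "error"))
# ['oom killed', 'db timeout']
```

Storage cost here is just the raw objects; compute cost is proportional to the objects a query actually touches, which is why partition pruning by key matters.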

Real-World Benefits: Efficiency, Flexibility, and Cost Savings

Theory is great, but how does this on-demand ingestion approach make a difference in practice? Here are a few real-world examples and use cases that show how CtrlB’s ingestor improves efficiency, flexibility, and cost for engineering teams:

- Cost Savings and Long-Term Retention: One early user of CtrlB’s platform was dealing with an avalanche of logs, on the order of 57 TB per week across their Kubernetes clusters. They could only afford to keep a few days of data in their “hot” index due to high ingestion and storage costs. Older logs were dumped to S3, where they effectively became cold, unsearchable data. When an incident required digging into week-old logs, the team had to run Athena queries or Spark jobs on the S3 archives, a process so slow (taking hours) that they often skipped it unless absolutely necessary. After adopting CtrlB, they moved to a much simpler model: log data goes straight to S3 and stays there, and the CtrlB on-demand engine handles queries whenever needed. The impact was huge: they cut their observability costs by over 70% while improving their ability to look further back in time and correlate issues across services. By decoupling data-volume growth from cost growth, the team no longer had to agonize over which logs to keep or throw away.

- Flexibility and Resilience at Scale: The benefits of on-demand, schema-less ingestion aren’t limited to small startups; even tech giants have recognized the need for this shift. For example, companies like Uber (with its massive microservice architecture) have gravitated toward schemaless logging to handle their scale. In traditional systems, any time a service team at Uber added a new field to their logs or changed a log format, the central pipeline could break or require a schema update. This was a huge bottleneck. With a deferred ingestion approach similar to CtrlB’s, Uber’s teams could let logs evolve freely: logs are stored as-is, allowing faster debugging and more resilient operations across hundreds of changing services. The lesson here is that at scale, flexibility is not a luxury; it’s a necessity. On-demand ingestion provides that flexibility by not hard-coding assumptions upfront. New service? New log field? No problem: the system adjusts on the fly. The result is less firefighting for pipeline fixes and more time solving actual engineering problems.

- Operational Efficiency and Workflow Improvements: By removing the heavy lifting from day-to-day tasks, CtrlB streamlines engineering workflows. Instead of spending hours maintaining pipelines or waiting on data, teams report big boosts in productivity and confidence with a unified, on-demand pipeline. As one CTO put it, “CtrlB’s intuitive interface made managing logs effortless, improving workflow efficiency”. Another called the ability to dynamically route different data types only when needed a “game-changer”, saving time and simplifying operations.
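To put the first example’s 57 TB per week in perspective, the arithmetic below converts that rate into the volume a given retention window must hold. The 3-day hot window is a hypothetical stand-in for “a few days”; no pricing is assumed, since storage and indexing costs vary by vendor and tier.

```python
WEEKLY_TB = 57  # ingest rate reported in the cost-savings example


def retained_tb(days, weekly_tb=WEEKLY_TB):
    """Volume that a retention window of `days` days must hold, in TB."""
    return weekly_tb / 7 * days


print(round(retained_tb(1), 2))  # 8.14 TB arriving per day
print(round(retained_tb(3), 1))  # hypothetical 3-day hot window: 24.4 TB
print(round(retained_tb(365)))   # one year kept in object storage: 2972 TB
```

Keeping a year hot in an index means indexing roughly 120 times the volume of a 3-day window, which is why teams in this position fall back to short hot retention unless storage and compute are decoupled.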

The takeaway is simple: with agile ingestion, engineers spend less time wrangling tools and more time solving real problems.

Conclusion: Rethink Your Pipeline (It’s Time for On-Demand)

It’s clear that running always-on ETL pipelines, enforcing rigid schemas, and paying heavy upfront costs is no longer the best way to handle observability data. CtrlB’s on-demand ingestor offers a modern alternative: flexible, fast, and cost-effective. By separating storage from compute and activating only when you query, it removes the waste and overhead of legacy pipelines.

You no longer have to choose between keeping all your logs and blowing out your budget, or throwing data away and hoping you won’t need it. With on-demand ingestion, every log is available when you need it, without the extra baggage.

For CTOs, SREs, and DevOps leaders, the message is clear: flexibility matters most. Switching to an on-demand model speeds up troubleshooting, cuts costs, and frees engineers from pipeline maintenance. If you’re still up late fixing broken jobs or cleaning up log indexes, maybe it’s time to ask: Is there a better way? The future of observability is on demand; it’s time to take the leap.